Multi-Channel Correlation Filters

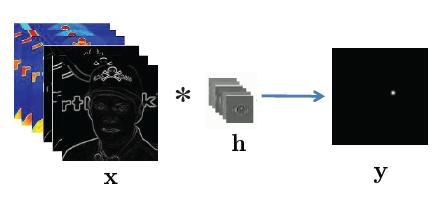

Modern descriptors such as HOG and SIFT are now commonly used in vision for pattern detection in images and video. From a signal processing perspective, this detection process can be posed efficiently as a correlation/convolution between a multi-channel image and a multi-channel detector/filter. The result is a single-channel response map indicating where the pattern (e.g. an object) has occurred. In this work we propose a novel framework, which we refer to as a multi-channel correlation filter, for learning a multi-channel detector/filter efficiently in the frequency domain, both in terms of training time and memory footprint. To demonstrate the effectiveness of our strategy, we evaluate it across a number of visual detection/localization tasks, where we (i) exhibit superior performance to current state-of-the-art correlation filters, and (ii) achieve superior computational and memory efficiency compared to state-of-the-art spatial detectors.
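To make the multi-channel correlation concrete, here is a minimal Python/NumPy sketch. It computes the single-channel response as a sum of per-channel circular correlations, once via the FFT and once directly in the spatial domain as a check. The function names are hypothetical and this is not the authors' code. It assumes circular boundary handling and a filter already zero-padded to the image size.

```python
import numpy as np


def multichannel_correlate_fft(x, h):
    """Correlate a multi-channel image x with a multi-channel filter h via the FFT.

    x, h: real arrays of shape (H, W, K) with the same spatial size
    (assumption: a smaller filter would first be zero-padded).
    Returns y of shape (H, W) with
    y[u, v] = sum_k sum_{m, n} h[m, n, k] * x[(u + m) % H, (v + n) % W, k].
    """
    X = np.fft.fft2(x, axes=(0, 1))
    Hf = np.fft.fft2(h, axes=(0, 1))
    # Spatial correlation = element-wise product with the conjugate filter spectrum;
    # summing over the channel axis collapses K channels into one response.
    Y = np.sum(X * np.conj(Hf), axis=-1)
    return np.real(np.fft.ifft2(Y))


def multichannel_correlate_direct(x, h):
    """Same response computed directly in the spatial domain (slow, for checking)."""
    H, W, K = x.shape
    y = np.zeros((H, W))
    for m in range(H):
        for n in range(W):
            # np.roll(x, (-m, -n), (0, 1))[u, v] == x[(u + m) % H, (v + n) % W]
            shifted = np.roll(x, shift=(-m, -n), axis=(0, 1))
            y += np.sum(h[m, n, :] * shifted, axis=-1)
    return y
```

The FFT route costs O(KD log D) for D pixels per channel, compared with O(KD²) for the direct double loop. This gap is why posing the problem in the frequency domain is attractive.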

- We propose an extension to canonical correlation filter theory that can efficiently handle multi-channel signals. Specifically, we show that, when posed in the frequency domain, the task of multi-channel correlation filter estimation forms a sparse banded linear system. Further, we demonstrate that this system can be solved much more efficiently than with spatial-domain methods.

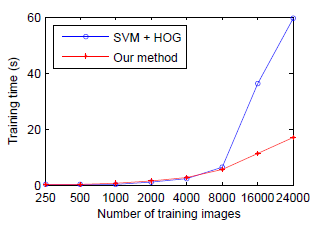

- We characterize theoretically and demonstrate empirically how our multi-channel correlation approach affords substantial memory savings when learning on multi-channel signals. Specifically, we show that the memory cost of our approach is not linear in the number of samples, allowing for substantial savings when learning detectors across large amounts of data.

- We apply our approach across a range of detection and localization tasks, including eye localization, car detection, and pedestrian detection. We demonstrate (i) superior performance to current state-of-the-art single-channel correlation filters, and (ii) superior computational and memory efficiency in comparison to spatial detectors (e.g. linear SVM) with comparable detection performance.
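The structure behind the first two points can be sketched in equations. The notation here is ours and is an assumption, so the paper's formulation may differ in details. There are N training images x_i, each with K channels of D pixels, together with desired responses y_i, filter channels h^(k), a ridge weight λ, and circular correlation ⋆. The FFT turns correlation into element-wise multiplication by the conjugate filter spectrum. By Parseval's theorem, the frequency-domain objective equals E up to a constant factor, so λ carries over unchanged. As a result, the objective decouples into one small problem per frequency j, with the predicted response spectrum written as r̂_i:

```latex
\begin{aligned}
E(\mathbf{h}) &= \frac{1}{2}\sum_{i=1}^{N}\Big\|\mathbf{y}_i-\sum_{k=1}^{K}\mathbf{h}^{(k)}\star\mathbf{x}_i^{(k)}\Big\|_2^2
  +\frac{\lambda}{2}\sum_{k=1}^{K}\big\|\mathbf{h}^{(k)}\big\|_2^2,\\
\hat{r}_i(j) &= \sum_{k=1}^{K}\hat{x}_i^{(k)}(j)\,\overline{\hat{h}^{(k)}(j)}
  = \hat{\mathbf{x}}_i(j)^{\top}\mathbf{g}(j),\qquad \mathbf{g}(j)=\overline{\hat{\mathbf{h}}(j)}\in\mathbb{C}^{K},\\
\Big(\lambda I_K+\sum_{i=1}^{N}\overline{\hat{\mathbf{x}}_i(j)}\,\hat{\mathbf{x}}_i(j)^{\top}\Big)\,\mathbf{g}(j)
  &= \sum_{i=1}^{N}\overline{\hat{\mathbf{x}}_i(j)}\,\hat{y}_i(j),\qquad j=1,\dots,D.
\end{aligned}
```

Stacked over all frequencies, this is a DK×DK system. Its entries are nonzero only where frequency indices match, so it is banded in channel-major order and block-diagonal once reordered by frequency. Solving D independent K×K systems costs O(DK³), compared with O(D³K³) for a naive dense solve of the equivalent spatial system. The accumulated sums on both sides need O(DK²) memory regardless of N.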

An example of multi-channel correlation/convolution, where a multi-channel image x is correlated/convolved with a multi-channel filter h to give a single-channel response y. By posing this objective in the frequency domain, our multi-channel correlation filter approach aims to provide a computationally and memory-efficient strategy for estimating h given x and y.
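Estimating h from pairs (x, y) in the frequency domain can be sketched as follows. This is a minimal NumPy sketch, not the authors' implementation. It assumes circular correlation, a ridge (Tikhonov) penalty with weight `lam`, and filters the same size as the training images. The class `MCCFAccumulator` and its methods are hypothetical names. Each training pair only updates per-frequency K×K sums, so memory stays fixed as samples are added, and the solve is one small K×K system per frequency.

```python
import numpy as np


class MCCFAccumulator:
    """Hypothetical multi-channel correlation filter trainer (illustrative sketch).

    Training images x have shape (H, W, K); desired responses y have shape (H, W).
    Only a K x K matrix and a K-vector are stored per frequency, so memory is
    O(H * W * K^2) regardless of how many samples are added.
    """

    def __init__(self, shape, num_channels, lam=1e-2):
        H, W = shape
        K = num_channels
        self.lam = lam
        # S[u, v] accumulates sum_i conj(x_i(u, v)) x_i(u, v)^T  (K x K per frequency)
        self.S = np.zeros((H, W, K, K), dtype=complex)
        # b[u, v] accumulates sum_i conj(x_i(u, v)) * y_i(u, v)  (K-vector per frequency)
        self.b = np.zeros((H, W, K), dtype=complex)

    def add_sample(self, x, y):
        X = np.fft.fft2(x, axes=(0, 1))  # (H, W, K) channel spectra
        Y = np.fft.fft2(y)               # (H, W) desired response spectrum
        Xc = np.conj(X)
        self.S += Xc[..., :, None] * X[..., None, :]
        self.b += Xc * Y[..., None]

    def solve(self):
        K = self.S.shape[-1]
        A = self.S + self.lam * np.eye(K)
        # Batched solve: one independent K x K system per frequency.
        g = np.linalg.solve(A, self.b[..., None])[..., 0]
        # The model is Y = sum_k X_k * conj(H_k), so the filter spectrum is conj(g).
        Hf = np.conj(g)
        # Real data gives a Hermitian-symmetric solution; drop round-off imaginary parts.
        return np.real(np.fft.ifft2(Hf, axes=(0, 1)))
```

Typical use would call `add_sample(x, y)` once per training image and `solve()` once at the end to obtain a spatial filter of shape (H, W, K). With the unnormalized FFT used by NumPy, this per-frequency solution coincides with ridge regression on the spatial objective for the same `lam`.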

Car detection demo

Eye detection demo

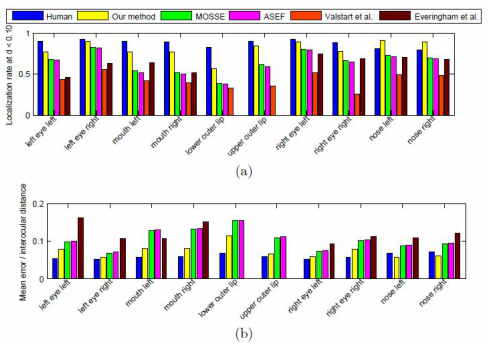

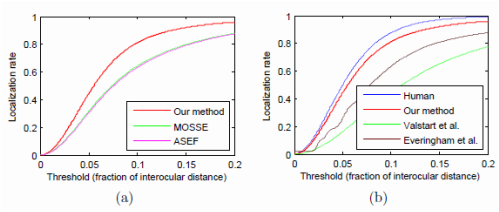

Facial landmark localization on the LFW dataset (HOG channels)

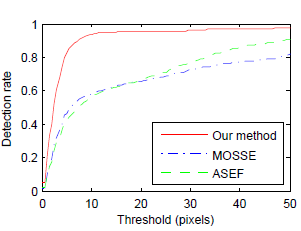

Car detection on the MIT StreetScene dataset, compared with prior filters (HOG channels)

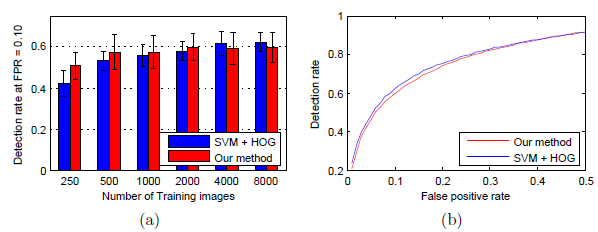

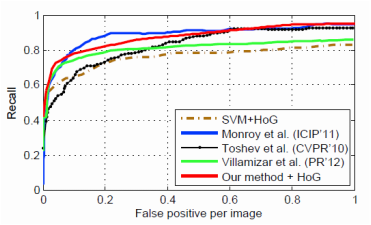

Pedestrian detection on the Daimler dataset, compared with SVM (HOG channels)

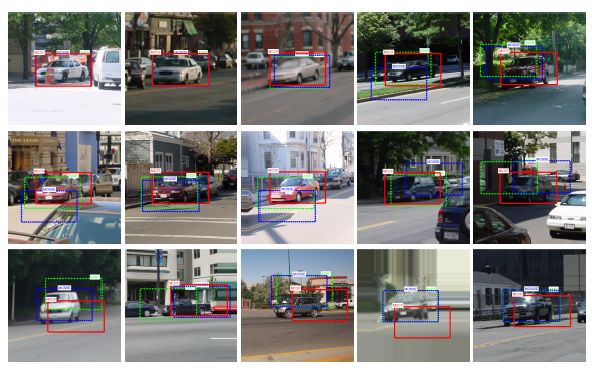

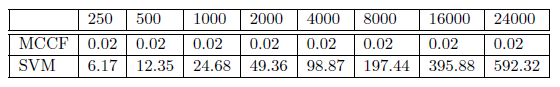

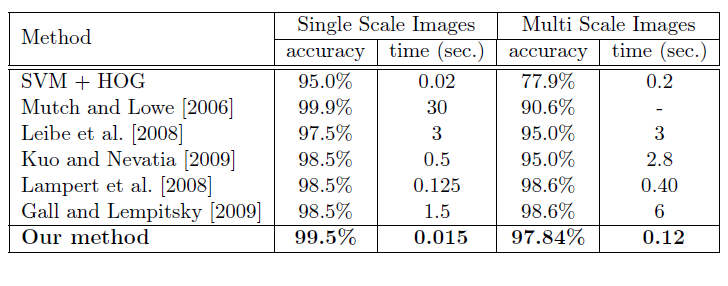

UIUC car detection results (HOG channels)

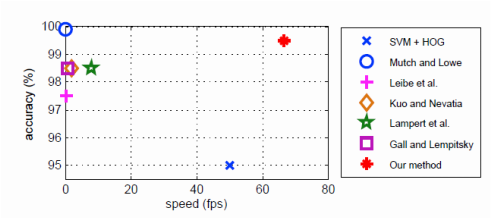

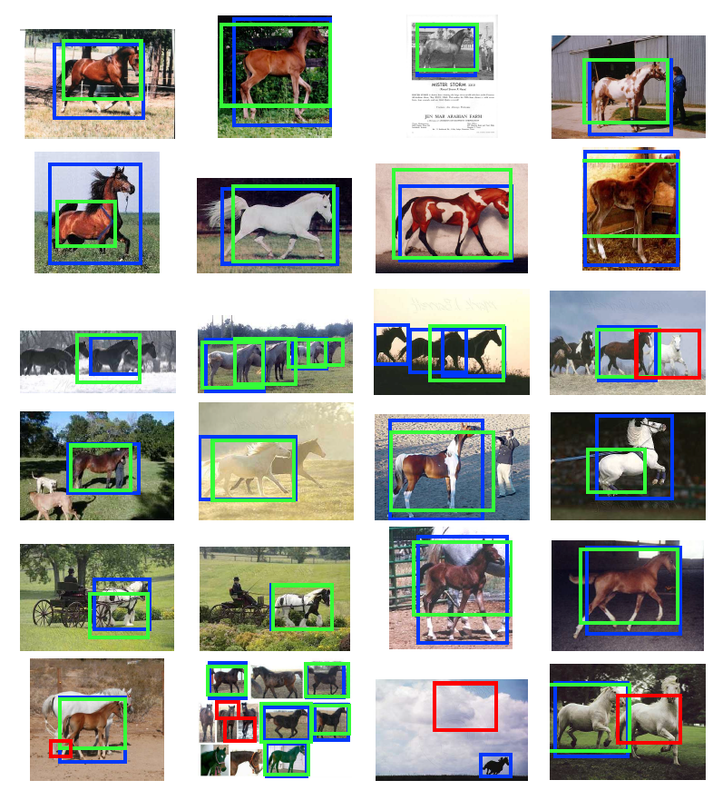

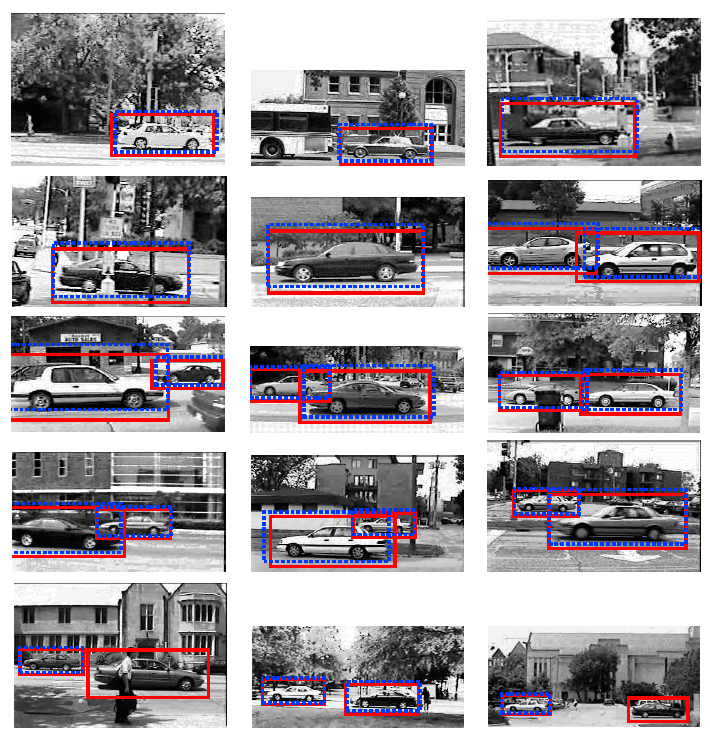

Detection results of our method on the UIUC cars dataset. The proposed method is able to find the cars in street images captured under uncontrolled conditions, with challenging intra-class variation, heavily textured backgrounds, and extreme lighting and scale changes. The ground truth and the detected cars are shown by the red and dashed blue boxes, respectively.